FatdogArm now runs Xorg!

FatdogArm has reached its first milestone - it is now running an X desktop! Sure, the only apps available are xterm and xclock, but that's a good start.

On the downside, kernel 3.11 is now at rc3, but linux-next still shows no sign that XFS will be compatible with user namespaces anytime soon (UIDGID_CONVERTED still forces XFS = n).


Native compiler article is up

Chapter 6 of LFS is done, and I now have a usable command-line root filesystem. That's the end of LFS for me (I don't need the rest of the chapters on booting and initscripts because I can already boot the system and I already have initscripts from Fatdog).

I'm now on the first item on my (revised) roadmap - building Xorg packages.

Meanwhile, I have written the article on preparing the hard-float native compiler here.


FatdogArm initrd article is up

I'm now in the middle of LFS Chapter 6. I have completed the final distribution-ready hard-float native compiler (with the proper name this time - arm-unknown-linux-gnueabihf) and am using it to build the rest of the packages in Chapter 6.

While waiting for them to build, I managed to write the next article about using initrd with the ARM build here.


Native compiler working

I have completed Chapter 5 of LFS and have a working hard-float native compiler. I am now at the early steps of Chapter 6, working my way towards getting the distribution version of the toolchain. Looking good!

And one ARM cycle is definitely not worth one x86 cycle. My 1GHz A10 feels like a 600 MHz Celeron.

It took me 3.5 hours to build gcc, and I had to do it more than once since LFS is, well, not exactly targeted towards ARM - plus I changed direction from the default soft-float to hard-float in the middle of the compile. In the end I had to do it 5 times.


This is looking more and more attractive now, but I'm a little tight with the budget right now so that'll have to wait.

I'm logging the final installs with paco. I'm still considering which package manager to use, since paco doesn't support pulling packages from a remote location (paco and gpaco are for managing local packages only). I have slapt-get and gslapt in mind, but I will have to think further.


ARM First Boot

Following my post here, I have published the first article of my experiments in porting Fatdog to the ARM platform. It is a work in progress, which means the contents are not stable and will be modified / re-arranged / re-organised as the results reveal themselves in time.

Boy, it took longer to write the article than to actually do the experiment!

It is one thing to write rants on a blog, but it is another thing to write proper documentation on the wiki! I may need to encourage myself to continue to write!



How to run Slacko Puppy side-by-side with Fatdog64

Somebody asked me recently whether it is possible to run Slacko Puppy side-by-side with (or alongside) Fatdog - that is, without the need for dual-booting etc.

It is possible.

In my original response I provided the outline steps of how to do so, but now I thought others may be interested too - so I decided to write an article about it. The article is here.

Enjoy.


The beginning of Fatdog ARM

A year ago, most of the people on the Puppy Linux forums were taken over by the hype of ARM-based systems. (Some still are.) It all started when Barry Kauler, the father of Puppy Linux, got hold of a cheap ARM-based "smart media player" (Mele A1000) which was in fact more powerful than many PCs of yesteryear. Equipped with 512MB RAM and a 1GHz ARMv7 CPU, it came with Android and was advertised as a media player, but its wealth of ports and its flexibility (the ability to boot directly from SD card - practically unheard of in the ARM/embedded world before this) made it ideal as a possible "desktop replacement". By the time we heard about it, somebody had already managed to run Ubuntu ARM on it. Imagine, a standard Linux distribution running on an ARM media player! That was in fact very exciting.

Barry started an ARM port of Puppy Linux for the Mele; his first release was Puppy "Lui" (see http://bkhome.org/blog/?viewDetailed=02823, http://distro.ibiblio.org/quirky/arm/releases/alpha/README-mele-sd-4gb-lui-5.2.90.htm). Everyone was in an ARM frenzy for a (long) while. Not long after that, the Raspberry Pi (Raspi for short) came, and the folks got even more excited. Barry built another ARM port for the Raspi (the Mele one didn't work because the Mele's CPU is ARMv7 while the Raspi's is ARMv6), called "sap6" (short for (Debian) Squeeze Puppy for ARMv6 - see http://bkhome.org/blog/?viewDetailed=02865). He released a few versions of sap6 (Raspi being more popular than Mele despite its obvious shortcomings), but that was that - Barry moved on to other things (he is planning to return to it, though; he got a quad-core Odroid board late last year).

I was caught in the frenzy too, for I can see the possibilities of where this can go, provided that ARM can fulfill the promise of being the low-power, low-cost, ubiquitous computer. For example, it can easily replace traditional desktops for those who can't afford them. We have yet to see the promise fulfilled, but it is still going in that direction so I'm happy. The tablet market (where most of these ARM CPUs are going) has been going strong, and despite many misgivings about tablets, if one can add a keyboard and mouse to them, many tablet users can actually become productive. All in all, it is about an alternative computing platform for the masses.

To start with, I experimented with Qemu. I documented the process of running sap6 under Qemu, for those who wanted to play with or test sap6 but didn't have a Raspi on hand: http://www.murga-linux.com/puppy/viewtopic.php?t=79358.

That little experiment was quickly followed by my attempt to cross-build a minimal system from scratch, still targeting Qemu. There was one system that aimed to do so, called "bootstrap linux", which uses the musl C library (then brand-new). After a few hurdles and much helpful advice from the musl mailing list, I got it up and running (see https://github.com/jamesbond3142/bootstrap-linux/). That experiment taught me about the complications of building compilers (gcc) by hand, and that small, unforeseen interactions between host tools and compiler build scripts can result in hard-to-find, hard-to-debug crashes on the target system (see http://www.murga-linux.com/puppy/viewtopic.php?t=78112&start=30).

Of course, along the way I got to learn to do some cross-debugging and reviewed ARM assembly language on cross-gdb. That brought some good memories of the days I spent writing 386 assembly language for a bare-metal protected mode 386 debugger myself, for a certain 32-bit DOS extender :)

Qemu was nice, but in the end I knew I needed real hardware: compiling gtkdialog, which took less than 10 seconds on my laptop, took more than 10 minutes on Qemu on the same laptop. To that end, I decided to go for the Mele A1000 too. That was mid last year, and apart from booting Puppy Lui on it, that Mele didn't do much for months on end.

Until now.

In the last few days I have built a new kernel and a minimal busybox-based system for it, and I've got it running with a framebuffer console on my TV. I used Fatdog64's initrd (with busybox suitably replaced by its ARM version) and it felt good to finally see "Fatdog" booting on an ARM CPU.

In the next few posts I will write more about these, the steps, the information I have collected (linux-sunxi has grown from useless to extremely helpful in a year) as well as future roadmaps.


Wiki

Looking back at this blog, I have found that some articles are getting uncomfortably long. Long articles present two problems on this blog:

1. It is not easy to read technical articles in the font of my choosing.

2. It is not easy to write (bbcode, which is used on this blog, isn't exactly the most writer-friendly markup).

I don't want to change the font. When I started this blog, I didn't plan that all of its contents will be technical (and it still isn't - it's just that I haven't got the time or inclination to write non-technical posts yet) - thus the choice of casual fonts.

Instead, I have started another sub-site to house longer articles. This blog will just serve as announcements and links to them.

I have two pieces of software under consideration (ah, the spoils of open source!): yawk (a wiki software) and grutatxt (a markup processor). While a wiki obviously isn't the same as a markup processor, for my intents and purposes they are identical since the wiki will be read-only.

Grutatxt has the benefit that its source markup is more readable than yawk's (or other wikis', for that matter), but yawk definitely has more features (automatic navigation, etc) that I wouldn't want to handle manually. Both are relatively easy to write with, but their markups are largely incompatible. Grutatxt uses perl, which is available in most places; yawk uses - well, awk, but it has to be a specific version of awk (GNU Awk 3.1.5+). Grutatxt has a companion software called Gruta that provides a full-fledged Content Management System (CMS) (which meets or exceeds what yawk can do), but that will be for consideration at a later date.

For now, I'm using yawk (because that's what I started with before I found grutatxt), but I actually have both systems online and will decide which one I will use later.

Click here to see the wiki.


Power management for AMD radeon driver is coming!

This is really good news!

As I said here, my laptop runs 20 degrees centigrade hotter with the open-source radeon driver compared to the proprietary Catalyst driver.

Apparently AMD is listening: they have just pushed 165 patches, and among them is dynamic power management similar to what Catalyst has.

Looking forward to Linux 3.11


Bluez A2DP AudioSink for ALSA

Ok, here is the promised follow-up to my previous post.

I call it A2DP AudioSink for ALSA because at the moment that's all it can handle (which means it will not support HFP devices such as handsfree headsets etc). That would not be necessary anyway because the existing ALSA PCM plugin (if you run bluez in socket mode) already supports bi-directional streaming with these devices. It is A2DP which is a problem.

Despite my rants about the quality of bluez DBus API documentation, it is actually quite complete and thorough when it comes to listing the available functions and their parameters. So I will not repeat that information here; I suggest that you download bluez 4.101 source tarball and look at its /doc directory, particularly audio.txt and media.txt (you can look at it online too here).

Instead, I will summarise the critical missing information that is necessary for you to build your own A2DP Sink/Source.

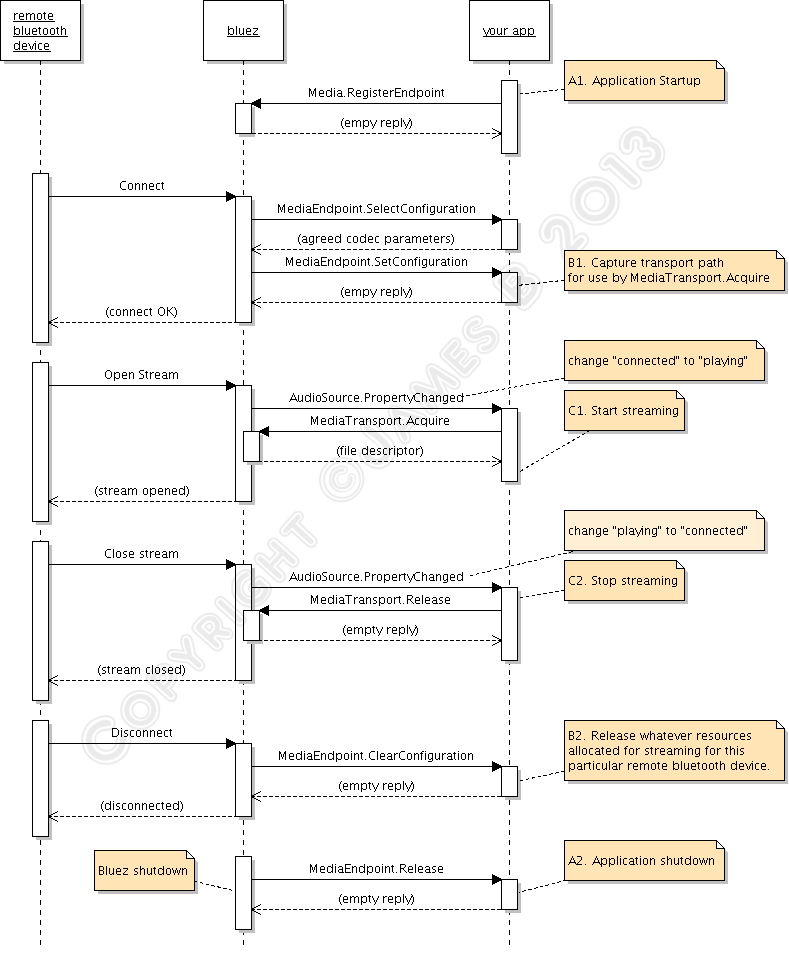

Let's start with the sequence of events that happens from the time of application startup, through device connection and disconnection, until application shutdown. Instead of "A2DP AudioSink for ALSA", which is a mouthful, I will just call it "your application" (or "your app", or even "you" for short) - I'm assuming here that you're reading this because you want to do your own stuff. Otherwise why bother, right?

Ok, here we go.

First, the caveat: the "bluetooth device" <--> "bluez" part of that diagram must be taken with a grain of salt, as it is not accurate. If you want to know the details you need to consult the A2DP and GAVDP specifications. It's there so that you can see the big picture of what is happening.

You can see that there are 3 levels of events that happen during the lifetime of your app. I have marked these as A, B, and C. Level A are the highest level events; these are startup/shutdown events and activities. Level B are events and actions that you must do when a remote bluetooth device is connected or disconnected. Level C are the actions you must do to carry out the actual audio streaming.

"Level A" events/actions (A1 and A2)

These are the events/actions you must do/handle when your application is starting up or shutting down. These actions/events only need to be done once.

A1. Application Startup. Upon starting up, you need to tell bluez that your app will handle A2DP Sink or Sources for it. You do it by calling org.bluez.Media.RegisterEndpoint with the appropriate parameters, mainly UUID, Codec, and Capabilities. Bluez documentation doesn't make it clear, but you cannot just plug arbitrary made-up values in here. "UUID" must be one of the pre-defined "Service Class identifiers" (from here); you want either the AudioSource or the AudioSink UUID. "Codec" must be one of the available supported codecs from the A2DP specification, and "Capabilities" must be filled with the particular codec's capabilities that you want to support.

If the registration is successful, you'll get an empty reply; otherwise you'll get an error.
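To make the three registration parameters more concrete, here is a minimal sketch in Python of what they might look like for the SBC codec. It only builds the values; it does not talk to DBus. The two UUIDs are the standard Bluetooth service class identifiers and the bit layout follows the SBC section of the A2DP specification, but the helper names, the chosen capability set, and the bitpool limits (2 and 53) are my own illustrative assumptions, not taken from the code in this post.

```python
# Standard Bluetooth service class UUIDs (16-bit IDs 0x110A / 0x110B
# expanded with the Bluetooth base UUID).
A2DP_SOURCE_UUID = "0000110a-0000-1000-8000-00805f9b34fb"
A2DP_SINK_UUID = "0000110b-0000-1000-8000-00805f9b34fb"

SBC_CODEC = 0x00  # codec ID of SBC, the mandatory A2DP codec

# SBC capability bits (one bit per supported option).
FREQ_16000, FREQ_32000, FREQ_44100, FREQ_48000 = 0x8, 0x4, 0x2, 0x1
MODE_MONO, MODE_DUAL, MODE_STEREO, MODE_JOINT = 0x8, 0x4, 0x2, 0x1
BLOCKS_4, BLOCKS_8, BLOCKS_12, BLOCKS_16 = 0x8, 0x4, 0x2, 0x1
SUBBANDS_4, SUBBANDS_8 = 0x2, 0x1
ALLOC_SNR, ALLOC_LOUDNESS = 0x2, 0x1


def sbc_capabilities(freqs, modes, blocks, subbands, allocs, min_pool, max_pool):
    """Pack SBC capabilities into their 4-byte form (hypothetical helper)."""
    return bytes([
        (freqs << 4) | modes,                        # byte 0: frequency | channel mode
        (blocks << 4) | (subbands << 2) | allocs,    # byte 1: blocks | subbands | allocation
        min_pool,                                    # byte 2: minimum bitpool
        max_pool,                                    # byte 3: maximum bitpool
    ])


def endpoint_properties(uuid, capabilities):
    """Build the property dictionary for endpoint registration (hypothetical helper)."""
    return {"UUID": uuid, "Codec": SBC_CODEC, "Capabilities": capabilities}


# Illustrative capability set: 44.1/48 kHz, everything else allowed.
caps = sbc_capabilities(
    FREQ_44100 | FREQ_48000,
    MODE_MONO | MODE_DUAL | MODE_STEREO | MODE_JOINT,
    BLOCKS_4 | BLOCKS_8 | BLOCKS_12 | BLOCKS_16,
    SUBBANDS_4 | SUBBANDS_8,
    ALLOC_SNR | ALLOC_LOUDNESS,
    2, 53,
)
props = endpoint_properties(A2DP_SINK_UUID, caps)
```

Which of the two UUIDs you pass depends on the role you are registering for. In a real app the dictionary values would also need to be wrapped in the DBus types your binding expects (a byte for the codec, an array of bytes for the capabilities).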

A2. Application Termination. Assuming you have successfully registered, bluez will notify you when your registration has been cancelled. This usually only happens when the bluetooth daemon itself is about to shut down. Bluez does it by calling the org.bluez.MediaEndpoint.Release method, which you must implement and handle (don't you wish now that bluez documentation differentiated between real "API" calls and "callback" interfaces, like this one?). At this stage you don't need to de-register or do any other cleanup with bluez; you just need to clean up your own resources. Reply with a blank message, and after that you are free to terminate your app.


"Level B" events/actions (B1 and B2)

These are the events/actions that happen / you must do when remote bluetooth devices get connected. They can happen multiple times within the lifetime of your app (ie between Level A events), for the same devices (in pairs), and for different devices (may be overlapping).

B1. Device Connection Events happen when a remote bluetooth device is connected. Assuming that your registration is successful, bluez will call your app again when an A2DP device is trying to connect to the computer. It does it using org.bluez.MediaEndpoint.SelectConfiguration. You will need to implement this method and interface and handle the call. Through this call, bluez will pass you some "Capabilities" codec parameters from the other end. You are supposed to compare these with your own capabilities and choose the best match that provides the highest quality audio. Your reply to bluez will contain this chosen configuration.

If everything is all right, bluez will then call your app again, using org.bluez.MediaEndpoint.SetConfiguration. The parameter to this call should contain exactly the same codec parameters you gave back earlier in your reply to "SelectConfiguration". Among other things, the most important thing you must do here is this: you must record the "transport path" given as a parameter of this call. It is a unique object path that you need to pass along to org.bluez.MediaTransport.Acquire to get the file descriptor you need to use for the actual streaming. If you don't keep that path, you can't find it again. All being good, you reply with an empty message.
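The "compare and choose the best match" step of SelectConfiguration can be sketched as follows, assuming the codec is SBC and both capability sets are in the 4-byte form from the A2DP specification. This is a sketch and not the code from the tarball: the function names and the preference order (44.1 kHz first, joint stereo first, and so on) are my own assumptions about what "best" means.

```python
def pick_bit(mask, preference):
    """Return the first bit in `preference` that is set in `mask`."""
    for bit in preference:
        if mask & bit:
            return bit
    raise ValueError("no common setting")


def select_configuration(ours, theirs):
    """Choose a single SBC configuration that both ends support.

    `ours` and `theirs` are 4-byte SBC capability blobs:
    byte 0 = frequency (high nibble) | channel mode (low nibble),
    byte 1 = blocks (high nibble) | subbands (2 bits) | allocation (2 bits),
    byte 2 = minimum bitpool, byte 3 = maximum bitpool.
    """
    common0 = ours[0] & theirs[0]
    common1 = ours[1] & theirs[1]
    # Assumed preference order, most preferred first.
    freq = pick_bit(common0 >> 4, (0x2, 0x1, 0x4, 0x8))      # 44.1k, 48k, 32k, 16k
    mode = pick_bit(common0 & 0x0F, (0x1, 0x2, 0x4, 0x8))    # joint, stereo, dual, mono
    blocks = pick_bit(common1 >> 4, (0x1, 0x2, 0x4, 0x8))    # 16, 12, 8, 4
    subbands = pick_bit((common1 >> 2) & 0x3, (0x1, 0x2))    # 8, 4
    alloc = pick_bit(common1 & 0x3, (0x1, 0x2))              # loudness, SNR
    # The bitpool range is the overlap of the two ranges.
    min_pool = max(ours[2], theirs[2])
    max_pool = min(ours[3], theirs[3])
    if min_pool > max_pool:
        raise ValueError("bitpool ranges do not overlap")
    return bytes([
        (freq << 4) | mode,
        (blocks << 4) | (subbands << 2) | alloc,
        min_pool,
        max_pool,
    ])
```

The returned 4 bytes have exactly one bit set in each field, which is what distinguishes a configuration from a capability set.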

B2. Device Disconnection Events happen when the remote bluetooth device is disconnected. Bluez will call you on the org.bluez.MediaEndpoint.ClearConfiguration method. You are supposed to clear any resources you keep for that particular bluetooth device connection (ie, that particular "transport path"). Reply with a blank message.

"Level C" events/actions (C1 and C2)

These are the events/actions that happen / you must do to do the actual audio streaming. It can happen multiple times within "Level B" events for the same remote device, usually in pairs.

C1. Start streaming event. To detect this event, you must listen to org.bluez.AudioSource.PropertyChanged signal and keep track of its "State" property. The "start streaming" event happens when the state changes from "connected" to "playing". (There are a few other events too, which may be interesting for other purposes but not for us).

When this happens, you need to call org.bluez.MediaTransport.Acquire. Bluez will give you a file descriptor that you can read from, as well as its Read MTU (maximum transfer unit) - which is how big each packet would be. From here onwards, you can read this descriptor to obtain the A2DP packet, decode it, and output it. The Read MTU helps to determine how big a buffer you need to allocate. Note that the read isn't always successful; you must allow for error conditions such as EAGAIN because your CPU will be much faster at reading than what bluetooth (and the remote device) can send.
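As a rough illustration of the EAGAIN point, here is a sketch of a single-packet read in Python, assuming the descriptor is non-blocking. The function name and the timeout are my own choices; the pattern is simply "wait until readable, then read at most one MTU, and treat EAGAIN as no data yet".

```python
import os
import select


def read_packet(fd, read_mtu, timeout=1.0):
    """Read one packet from a non-blocking descriptor.

    Returns the bytes read, or None if nothing arrived within `timeout`.
    """
    # Wait until the descriptor is readable instead of spinning on it.
    ready, _, _ = select.select([fd], [], [], timeout)
    if not ready:
        return None
    try:
        return os.read(fd, read_mtu)
    except BlockingIOError:
        # EAGAIN: the data was not there after all; the caller can retry.
        return None
```

A streaming loop would call this repeatedly with the Read MTU as the buffer size and hand each non-empty result to the decoder.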

C2. Stop streaming event. Like the "start streaming" event, you can't decide this from the org.bluez.AudioSource.PropertyChanged signal alone; you need to detect the transition, which is "playing" to "connected". When this happens, you need to call org.bluez.MediaTransport.Release to release the transport back to bluez. In my tests, this is not strictly necessary but it is the polite way of doing it. It is also good for you to detect this event so that you can tell your "streaming" function to stop its work and rest for a while.
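Detecting the C1 and C2 transitions boils down to remembering the previous value of "State". A minimal sketch, assuming you feed it the new state string each time the PropertyChanged signal reports "State" (the class and method names are hypothetical):

```python
class StateWatcher:
    """Turn State property updates into start/stop streaming events."""

    def __init__(self, idle="connected", active="playing"):
        self.idle = idle        # state the transport rests in
        self.active = active    # state in which audio is flowing
        self.state = None       # last state seen, None before the first signal

    def update(self, new_state):
        """Record a new State value; return "start", "stop", or None."""
        old, self.state = self.state, new_state
        if old == self.idle and new_state == self.active:
            return "start"      # C1: time to Acquire the transport
        if old == self.active and new_state == self.idle:
            return "stop"       # C2: time to Release the transport
        return None
```

The two state names are constructor parameters so that the same watcher can be pointed at a different pair of states if needed.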

That's it! Easy peasy eh?

How about A2DP Source?

The events described above are for you to make your computer act as an A2DP Sink (or "Source", in bluez' parlance). What about building an A2DP Source (the computer sending audio data to bluetooth speakers)? As it turns out, the sequence of events is exactly the same, with very minor changes:

1. Instead of AudioSource.PropertyChanged, you need to listen to AudioSink.PropertyChanged.

2. The transition you need to detect is a bit different - instead of "connected" -> "playing" (and vice versa), you listen to "disconnected" -> "connected" (and vice versa).

3. You write to the descriptor with encoded data instead of reading from it.

About The code

In the source, I create a thread for doing the actual streaming (reading/writing to the file descriptors). I create the thread when I receive the B1 event (SetConfiguration), but it stays suspended until I receive the C1 event - that is, after I have completed the MediaTransport.Acquire call to get the file descriptor. I suspend the thread again when I receive the C2 event, and I terminate it only when I receive the B2 event (ClearConfiguration).
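The thread lifecycle described above (create at B1, run between C1 and C2, terminate at B2) can be sketched in Python with two events. This is only an illustration of the pattern, not the actual code, and all names in it are hypothetical; `step` stands in for "read or write one packet".

```python
import threading


class StreamWorker:
    """A streaming thread that can be suspended, resumed, and stopped."""

    def __init__(self, step):
        self._step = step                    # called repeatedly while running
        self._running = threading.Event()    # set between C1 and C2
        self._stopped = threading.Event()    # set at B2
        self._thread = threading.Thread(target=self._loop, daemon=True)
        self._thread.start()                 # B1: thread exists but is idle

    def _loop(self):
        while not self._stopped.is_set():
            # Sleep while suspended; wake up periodically to notice a stop.
            if self._running.wait(timeout=0.1):
                self._step()

    def resume(self):
        """C1: the transport has been acquired, start streaming."""
        self._running.set()

    def suspend(self):
        """C2: streaming stopped, go back to sleep."""
        self._running.clear()

    def stop(self):
        """B2: the device is gone, terminate the thread."""
        self._stopped.set()
        self._running.clear()
        self._thread.join()
```

One worker per transport path keeps the bookkeeping simple, since each connected device gets its own descriptor.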

The rest is straightforward. The code implements both Sink and Source. As you can see, the difference in handling is minimal.

The code is provided as an illustration and working example. It skimps on error checking; it focuses neither on performance nor robustness, but more on the working (and hopefully correct) way of handling A2DP connection under bluez. That being said, I find that the Sink is good enough, while the Source is a bit unsatisfactory. There is a README inside the tarball that shows how you can setup ALSA asoundrc for use with the A2DP Source so that it can act as a poor man's ALSA PCM plugin.

As usual, the code is released under GNU GPL Version 3 or later, except for the bits that I took from PulseAudio and bluez itself (SBC stuff, SBC setup stuff, and actual A2DP packet encoding/decoding) - they are licensed as per the original PulseAudio and bluez licenses.

Get it from here.

Bluez 5 and beyond

Question: Bluez 4.x is already obsolete by now. What do I have to do to get this example to work with bluez 5?

Answer: A lot of work.

I have not fully investigated the bluez 5 version of this, as I'm quite satisfied with bluez 4 for now. But from what I have gathered, the sequence of events is identical. Sure, the DBus interfaces change their names (bluez 5 adds "1" to the interface names, e.g. "org.bluez.MediaEndpoint" becomes "org.bluez.MediaEndpoint1"); and the signals change their skins too (AudioSource/AudioSink are gone, replaced by the generic org.freedesktop.DBus.Properties.PropertiesChanged, and you can probably decide whether to start/stop streaming directly from the state instead of having to watch the transitions), but the underlying events are still the same.


Conclusion

A2DP is just a small part of the Bluetooth specification. If you look at the links I gave earlier, you will see Bluetooth comes with over two dozen "profiles" (ie, functionalities). Bluez doesn't implement all of them (although the unimplemented list is getting smaller every day, thanks to the very hard work of bluez developers), which is fine, but bluez could really do better with its documentation. At least give us userspace programmers something to wrap our heads around. Until that happens, I still consider that "bluez is one of the best kept secrets in Linux".
